Azure Signalr Service Is Not Connected Yet Please Try Again Later

Troubleshooting guide for Azure SignalR Service common issues

This guide provides troubleshooting guidance based on the common issues that customers have encountered and resolved over the past years.

Access token too long

Possible errors

- Client-side ERR_CONNECTION_

- 414 URI Too Long

- 413 Payload Too Large

- Access Token must not be longer than 4K. 413 Request Entity Too Large

Root cause

For HTTP/2, the max length for a single header is 4 K, so if you use a browser to access the Azure service, this limitation causes an ERR_CONNECTION_ error.

For HTTP/1.1, or C# clients, the max URI length is 12 K, and the max header length is 16 K.

With SDK version 1.0.6 or higher, /negotiate will throw 413 Payload Too Large when the generated access token is larger than 4 K.

Solution

By default, claims from context.User.Claims are included when generating the JWT access token to ASRS (Azure SignalR Service), so that the claims are preserved and can be passed from ASRS to the Hub when the client connects to the Hub.

In some cases, context.User.Claims are used to store lots of information for the app server, most of which is not used by Hubs but by other components.

The generated access token is passed through the network, and for WebSocket/SSE connections, access tokens are passed through query strings. So, as the best practice, we suggest passing only the necessary claims from the client through ASRS to your app server when the Hub needs them.

There is a ClaimsProvider for you to customize the claims passed to ASRS inside the access token.
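As an illustration of picking only the necessary claims, here is a minimal sketch of an allow-list filter. The class name NecessaryClaims and the choice of allowed claim types are assumptions made for this example; only System.Security.Claims from the base class library is used.

```csharp
using System.Collections.Generic;
using System.Linq;
using System.Security.Claims;

public static class NecessaryClaims
{
    // Assumption: the Hub only needs the user's ID and name.
    // Adjust this allow-list to whatever your Hub actually reads.
    private static readonly HashSet<string> AllowedTypes = new HashSet<string>
    {
        ClaimTypes.NameIdentifier,
        ClaimTypes.Name,
    };

    // Keeps only allow-listed claims so the generated access token stays small.
    public static IEnumerable<Claim> Pick(IEnumerable<Claim> claims) =>
        claims.Where(claim => AllowedTypes.Contains(claim.Type));
}
```

A helper like this could be passed as the provider, for example `context => NecessaryClaims.Pick(context.User.Claims)`, in place of an inline Where(...) filter.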

For ASP.NET Core:

    services.AddSignalR()
        .AddAzureSignalR(options =>
        {
            // Pick up only the necessary claims
            options.ClaimsProvider = context => context.User.Claims.Where(...);
        });

For ASP.NET:

    services.MapAzureSignalR(GetType().FullName, options =>
    {
        // Pick up only the necessary claims
        options.ClaimsProvider = context => context.Authentication?.User.Claims.Where(...);
    });

Having issues or feedback about the troubleshooting? Let us know.

TLS 1.2 required

Possible errors

- ASP.NET "No server available" error #279

- ASP.NET "The connection is not active, data cannot be sent to the service." error #324

- "An error occurred while making the HTTP request to https://<API endpoint>. This error could be because the server certificate is not configured properly with HTTP.SYS in the HTTPS case. This error could also be caused by a mismatch of the security binding between the client and the server."

Root cause

Azure Service only supports TLS1.2 for security concerns. With .NET Framework, it is possible that TLS1.2 is not the default protocol. As a result, the server connections to ASRS cannot be successfully established.

Troubleshooting guide

-

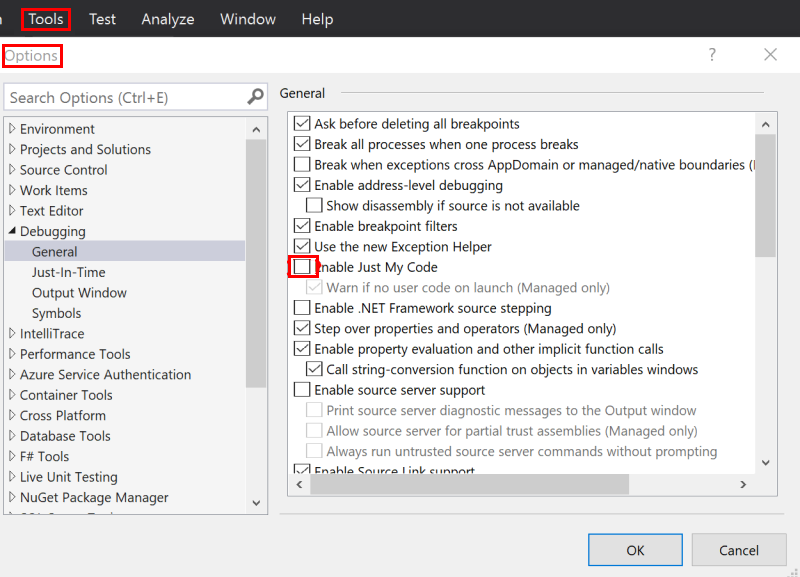

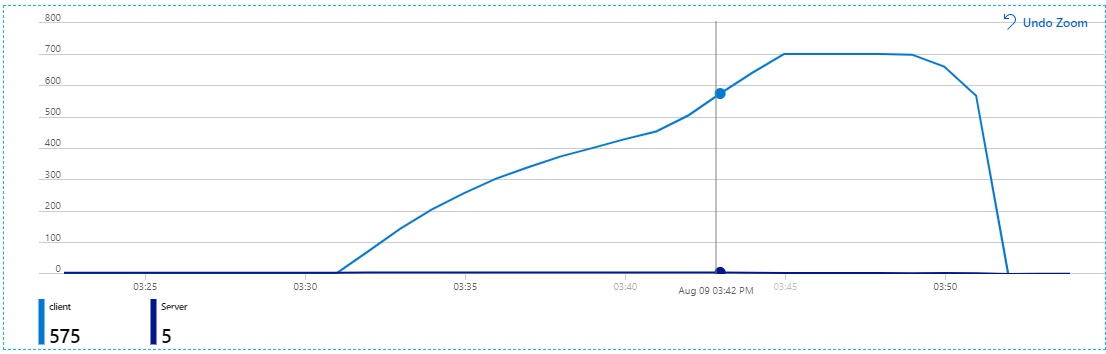

If this error can be reproduced locally, uncheck Just My Lawmaking and throw all CLR exceptions and debug the app server locally to run into what exception throws.

-

Uncheck Just My Lawmaking

-

Throw CLR exceptions

-

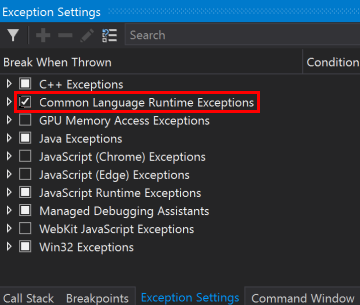

See the exceptions throw when debugging the app server-side code:

-

-

For ASP.NET ones, you can also add post-obit lawmaking to your

Startup.csto enable detailed trace and run across the errors from the log.app.MapAzureSignalR(this.GetType().FullName); // Make sure this switch is called subsequently MapAzureSignalR GlobalHost.TraceManager.Switch.Level = SourceLevels.Information;

Solution

Add the following code to your Startup:

    ServicePointManager.SecurityProtocol = SecurityProtocolType.Tls12;

400 Bad Request returned for client requests

Root cause

Check if your client request has multiple hub query strings. hub is a preserved query parameter, and the service returns 400 if it detects more than one hub in the query.
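To check a captured request URL for this condition, a small helper along these lines may be useful. It is a sketch only: the class name HubQueryCheck is made up for this example, and it relies on System.Web.HttpUtility.ParseQueryString (on .NET Framework this needs a reference to System.Web).

```csharp
using System;
using System.Web;

public static class HubQueryCheck
{
    // Returns true when the URL carries more than one "hub" query parameter.
    public static bool HasDuplicateHub(string url)
    {
        string query = new Uri(url).Query;
        // ParseQueryString groups repeated keys; GetValues returns null when the key is absent.
        var hubs = HttpUtility.ParseQueryString(query).GetValues("hub");
        return hubs != null && hubs.Length > 1;
    }
}
```

For instance, a hypothetical URL ending in `?hub=chat&hub=news` would be flagged, while one ending in `?hub=chat` would not.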

401 Unauthorized returned for client requests

Root cause

Currently the default value of the JWT token's lifetime is 1 hour.

For ASP.NET Core SignalR, when it is using the WebSocket transport type, it is OK.

For ASP.NET Core SignalR's other transport types, SSE and long-polling, this means that by default the connection can persist for at most 1 hour.

For ASP.NET SignalR, the client sends a /ping KeepAlive request to the service from time to time. When the /ping fails, the client aborts the connection and never reconnects. This means that, for ASP.NET SignalR, the default token lifetime makes the connection last for at most 1 hour for all transport types.

Solution

For security concerns, extending the TTL is not encouraged. We suggest adding reconnect logic to the client to restart the connection when such a 401 occurs. When the client restarts the connection, it negotiates with the app server again and gets a renewed JWT token.

Check here for how to restart client connections.
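The following is a minimal sketch of such reconnect logic for the ASP.NET Core C# client, not the official sample. It assumes the Microsoft.AspNetCore.SignalR.Client package; the class name ReconnectingClient, the hubUrl parameter, and the 0 to 5 second delay range are choices made for this example.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.SignalR.Client;

public static class ReconnectingClient
{
    private static readonly Random Jitter = new Random();

    // hubUrl is a placeholder for your app server's hub endpoint.
    public static async Task<HubConnection> StartAsync(string hubUrl)
    {
        var connection = new HubConnectionBuilder().WithUrl(hubUrl).Build();

        // Closed fires whenever the connection ends, including when the token expires.
        // Note: it also fires after a deliberate StopAsync, so a real client
        // should track that case and skip the restart.
        connection.Closed += async error =>
        {
            while (true)
            {
                // Random delay (0 to 5 seconds) so many clients do not negotiate at once.
                await Task.Delay(TimeSpan.FromSeconds(Jitter.NextDouble() * 5));
                try
                {
                    // StartAsync negotiates with the app server again and gets a renewed token.
                    await connection.StartAsync();
                    return;
                }
                catch (ObjectDisposedException)
                {
                    // The connection was disposed on purpose; stop retrying.
                    return;
                }
                catch (Exception)
                {
                    // Negotiate or connect failed; loop and try again.
                }
            }
        };

        await connection.StartAsync();
        return connection;
    }
}
```

The loop retries indefinitely, which matches an always-retry approach; a cap on attempts may be more appropriate for some clients.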

404 returned for client requests

For a SignalR persistent connection, the client first sends /negotiate to Azure SignalR service and then establishes the real connection to Azure SignalR service.

Troubleshooting guide

- Follow How to view outgoing requests to capture the request from the client to the service.

- Check the URL of the request when the 404 occurs. If the URL targets your web app and is similar to {your_web_app}/hubs/{hubName}, check whether the client's SkipNegotiation is true. When using Azure SignalR, the client receives a redirect URL when it first negotiates with the app server. The client should NOT skip negotiation when using Azure SignalR.

- Another 404 can happen when the connect request is handled more than 5 seconds after /negotiate is called. Check the timestamp of the client request, and open an issue with us if the request to the service has a slow response.
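For the SkipNegotiation check above, this is roughly what a C# client configuration that keeps negotiation enabled looks like. It is a sketch assuming the Microsoft.AspNetCore.SignalR.Client package; the class name NegotiatingClient and the hubUrl parameter are placeholders for this example.

```csharp
using Microsoft.AspNetCore.SignalR.Client;

public static class NegotiatingClient
{
    // hubUrl is a placeholder, for example {your_web_app}/hubs/{hubName}.
    public static HubConnection Build(string hubUrl) =>
        new HubConnectionBuilder()
            .WithUrl(hubUrl, options =>
            {
                // Keep negotiation enabled (false is also the default) so the client
                // can receive the redirect URL to Azure SignalR from the app server.
                options.SkipNegotiation = false;
            })
            .Build();
}
```

If your client code sets SkipNegotiation to true anywhere, removing that setting should have the same effect as the explicit assignment shown here.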

404 returned for ASP.NET SignalR's reconnect request

For ASP.NET SignalR, when the client connection drops, it reconnects using the same connectionId three times before stopping the connection. /reconnect can help if the connection is dropped due to intermittent network issues, because /reconnect can reestablish the persistent connection successfully. Under other circumstances, for example, when the client connection is dropped because the routed server connection is dropped, or SignalR Service has some internal errors like instance restart/failover/deployment, the connection no longer exists, so /reconnect returns 404. This is the expected behavior for /reconnect, and after three retries the connection stops. We suggest having connection restart logic for when the connection stops.

429 (Too Many Requests) returned for client requests

There are two cases.

Concurrent connection count exceeds limit

For Free instances, the concurrent connection count limit is 20. For Standard instances, the concurrent connection count limit per unit is 1 K, which means Unit100 allows 100 K concurrent connections.

The connections include both client and server connections. Check here for how connections are counted.

Too many negotiate requests at the same time

We suggest having a random delay before reconnecting. Check here for retry samples.
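One way to compute such a random delay is capped exponential backoff with full jitter. This is a common general-purpose choice rather than something this guide prescribes, and the class name ReconnectDelay and the default base and cap values are assumptions for this sketch.

```csharp
using System;

public static class ReconnectDelay
{
    // attempt is 0 for the first retry. baseSeconds and maxSeconds are illustrative defaults.
    public static TimeSpan Next(int attempt, Random random, double baseSeconds = 1, double maxSeconds = 30)
    {
        // Exponential ceiling: baseSeconds * 2^attempt, capped at maxSeconds.
        double ceiling = Math.Min(maxSeconds, baseSeconds * Math.Pow(2, attempt));
        // Full jitter: pick uniformly in [0, ceiling) so clients spread out their negotiate calls.
        return TimeSpan.FromSeconds(random.NextDouble() * ceiling);
    }
}
```

A client would await Task.Delay(ReconnectDelay.Next(attempt, random)) before each negotiate attempt and reset attempt to 0 once the connection succeeds.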

500 Error when negotiate: Azure SignalR Service is not connected yet, please try again later

Root cause

This error is reported when there is no server connection to Azure SignalR Service connected.

Troubleshooting guide

Enable server-side trace to find out the error details when the server tries to connect to Azure SignalR Service.

Enable server-side logging for ASP.NET Core SignalR

Server-side logging for ASP.NET Core SignalR integrates with the ILogger-based logging provided in the ASP.NET Core framework. You can enable server-side logging by using ConfigureLogging. A sample usage follows:

    .ConfigureLogging((hostingContext, logging) =>
    {
        logging.AddConsole();
        logging.AddDebug();
    })

Logger categories for Azure SignalR always start with Microsoft.Azure.SignalR. To enable detailed logs from Azure SignalR, configure the preceding prefix to Debug level in your appsettings.json file, as shown below:

    {
        "Logging": {
            "LogLevel": {
                ...
                "Microsoft.Azure.SignalR": "Debug",
                ...
            }
        }
    }

Enable server-side traces for ASP.NET SignalR

When using SDK version >= 1.0.0, you can enable traces by adding the following to web.config: (Details)

    <system.diagnostics>
        <sources>
            <source name="Microsoft.Azure.SignalR" switchName="SignalRSwitch">
                <listeners>
                    <add name="ASRS" />
                </listeners>
            </source>
        </sources>
        <!-- Sets the trace verbosity level -->
        <switches>
            <add name="SignalRSwitch" value="Information" />
        </switches>
        <!-- Specifies the trace writer for output -->
        <sharedListeners>
            <add name="ASRS" type="System.Diagnostics.TextWriterTraceListener" initializeData="asrs.log.txt" />
        </sharedListeners>
        <trace autoflush="true" />
    </system.diagnostics>

Client connection drops

When the client is connected to Azure SignalR, the persistent connection between the client and Azure SignalR can sometimes drop for different reasons. This section describes several possibilities causing such a connection drop and provides some guidance on how to identify the root cause.

Possible errors seen from the client side

- The remote party closed the WebSocket connection without completing the close handshake

- Service timeout. 30.00ms elapsed without receiving a message from service.

- {"type":7,"error":"Connection closed with an error."}

- {"type":7,"error":"Internal server error."}

Root cause

Client connections can drop under various circumstances:

- When Hub throws exceptions with the incoming request.

- When the server connection that the client is routed to drops. See the section below for details on server connection drops.

- When a network connectivity issue happens between client and SignalR Service.

- When SignalR Service has some internal errors like instance restart, failover, deployment, and so on.

Troubleshooting guide

- Open the app server-side log to see if anything abnormal took place.

- Check the app server-side event log to see if the app server restarted.

- Create an issue for us providing the time frame, and email the resource name to us.

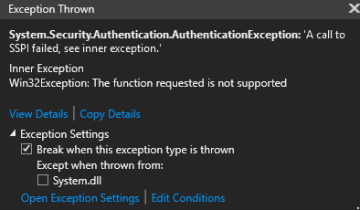

Client connection increases constantly

It might be caused by improper usage of the client connection. If someone forgets to stop/dispose the SignalR client, the connection remains open.

Possible errors seen from SignalR's metrics in the Monitoring section of the Azure portal resource menu

Client connections rise constantly for a long time in Azure SignalR's Metrics.

Root cause

The SignalR client connection's DisposeAsync is never called, so the connection stays open.

Troubleshooting guide

Check if the SignalR client never closes.

Solution

Check if you close the connection. Manually call HubConnection.DisposeAsync() to stop the connection after using it.

For example:

    var connection = new HubConnectionBuilder()
        .WithUrl(...)
        .Build();
    try
    {
        await connection.StartAsync();
        // Do your stuff
        await connection.StopAsync();
    }
    finally
    {
        await connection.DisposeAsync();
    }

Common improper client connection usage

Azure Function example

This issue often occurs when someone establishes a SignalR client connection in an Azure Function method instead of making it a static member of the Function class. You might expect only one client connection to be established, but you see the client connection count increase constantly in Metrics, in the Monitoring section of the Azure portal resource menu. All these connections drop only after the Azure Function or Azure SignalR service restarts. This is because Azure Function creates one client connection for each request; if you don't stop the client connection in the Function method, the client keeps the connections alive to Azure SignalR service.

Solution

- Remember to close the client connection if you use SignalR clients in an Azure function, or use the SignalR client as a singleton.

- Instead of using SignalR clients in an Azure function, you can create SignalR clients anywhere else and use Azure Functions Bindings for Azure SignalR Service to negotiate the client to Azure SignalR. You can also use the binding to send messages. Samples to negotiate the client and send messages can be found here. Further information can be found here.

- When you use SignalR clients in an Azure function, there might be a better architecture for your scenario. Check if you have designed a proper serverless architecture. You can refer to Real-time serverless applications with the SignalR Service bindings in Azure Functions.
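If a SignalR client inside a function is unavoidable, the singleton option above could look roughly like this sketch. It assumes the Microsoft.AspNetCore.SignalR.Client package; the class name SharedHubConnection and the HubUrl value are hypothetical.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.AspNetCore.SignalR.Client;

public static class SharedHubConnection
{
    // Hypothetical hub URL; replace it with your own endpoint.
    private const string HubUrl = "https://example.com/hubs/chat";

    // Lazy ensures the connection is built and started once per process,
    // not once per function invocation.
    private static readonly Lazy<Task<HubConnection>> Connection =
        new Lazy<Task<HubConnection>>(async () =>
        {
            var connection = new HubConnectionBuilder().WithUrl(HubUrl).Build();
            await connection.StartAsync();
            return connection;
        });

    // Every invocation awaits the same started connection.
    // Caveat: if the first StartAsync fails, the failed task stays cached.
    public static Task<HubConnection> GetAsync() => Connection.Value;
}
```

Each function invocation would call `await SharedHubConnection.GetAsync()` instead of building a new connection, so the connection count in Metrics should stay flat per function host instance.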

Server connection drops

When the app server starts, in the background, the Azure SDK starts to initiate server connections to the remote Azure SignalR. As described in Internals of Azure SignalR Service, Azure SignalR routes incoming client traffic to these server connections. Once a server connection is dropped, all the client connections it serves are closed too.

As the connections between the app server and SignalR Service are persistent connections, they may experience network connectivity issues. In the Server SDK, we have an Always Reconnect strategy for server connections. As the best practice, we also encourage users to add continuous reconnect logic to the clients with a random delay time to avoid massive simultaneous requests to the server.

On a regular basis, there are new version releases for the Azure SignalR Service, and sometimes Azure-wide OS patching or upgrades, or occasionally interruptions from our dependent services. These may bring a short period of service disruption, but as long as the client side has a disconnect/reconnect mechanism, the impact is minimal, like any client-side caused disconnect-reconnect.

This section describes several possibilities leading to a server connection drop, and provides some guidance on how to identify the root cause.

Possible errors seen from the server side

- [Error]Connection "..." to the service was dropped

- The remote party closed the WebSocket connection without completing the close handshake

- Service timeout. 30.00ms elapsed without receiving a message from service.

Root cause

Server-service connection is closed by ASRS (Azure SignalR Service).

For ping timeout, it might be caused by high CPU usage or thread pool starvation on the server side.

For ASP.NET SignalR, a known issue was fixed in SDK 1.6.0. Upgrade your SDK to the newest version.

Thread pool starvation

If your server is starving, that means no threads are working on message processing. All threads are stuck, not responding, in a certain method.

Normally, this scenario is caused by sync-over-async code, such as calling Task.Result or Task.Wait() in async methods.

See ASP.NET Core performance best practices.

See more about thread pool starvation.
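To make the blocking pattern concrete, here is a small sketch contrasting a blocking call with an awaited one. LoadAsync is a hypothetical stand-in for any asynchronous I/O call, and the class name MessageHandler is made up for this example.

```csharp
using System.Threading.Tasks;

public static class MessageHandler
{
    // Hypothetical stand-in for an asynchronous I/O call.
    private static async Task<string> LoadAsync()
    {
        await Task.Delay(100);
        return "payload";
    }

    // Blocking: .Result holds a thread until LoadAsync finishes.
    // Under load, many such blocked threads can exhaust the thread pool.
    public static string HandleBlocking() => LoadAsync().Result;

    // Non-blocking: await releases the thread while the work is pending.
    public static async Task<string> HandleAsync() => await LoadAsync();
}
```

Both methods return the same value; the difference is only in whether a thread pool thread is held while waiting, which is what matters when many messages arrive at once.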

How to detect thread pool starvation

Check your thread count. If there are no spikes at that time, take these steps:

- If you're using Azure App Service, check the thread count in metrics. Check the Max aggregation.

- If you're using the .NET Framework, you can find metrics in the performance monitor in your server VM.

- If you're using .NET Core in a container, see Collect diagnostics in containers.

You can also use code to detect thread pool starvation:

    using System.Diagnostics.Tracing;
    using Microsoft.Extensions.Logging;

    public class ThreadPoolStarvationDetector : EventListener
    {
        private const int EventIdForThreadPoolWorkerThreadAdjustmentAdjustment = 55;
        private const uint ReasonForStarvation = 6;

        private readonly ILogger<ThreadPoolStarvationDetector> _logger;

        public ThreadPoolStarvationDetector(ILogger<ThreadPoolStarvationDetector> logger)
        {
            _logger = logger;
        }

        // Subscribe to the runtime's event source as soon as it is created.
        protected override void OnEventSourceCreated(EventSource eventSource)
        {
            if (eventSource.Name == "Microsoft-Windows-DotNETRuntime")
            {
                EnableEvents(eventSource, EventLevel.Informational, EventKeywords.All);
            }
        }

        protected override void OnEventWritten(EventWrittenEventArgs eventData)
        {
            // See: https://docs.microsoft.com/en-us/dotnet/framework/performance/thread-pool-etw-events#threadpoolworkerthreadadjustmentadjustment
            if (eventData.EventId == EventIdForThreadPoolWorkerThreadAdjustmentAdjustment &&
                eventData.Payload[3] as uint? == ReasonForStarvation)
            {
                _logger.LogWarning("Thread pool starvation detected!");
            }
        }
    }

Add it to your service:

    service.AddSingleton<ThreadPoolStarvationDetector>();

Then, check your log when the server connection is disconnected by ping timeout.
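As a complement to the event listener, the thread count mentioned at the start of this section can also be sampled directly in code. This is a minimal sketch using only System.Threading.ThreadPool; the class name ThreadPoolSnapshot is an assumption for this example, and how often to sample and what threshold to alert on are left to you.

```csharp
using System.Threading;

public static class ThreadPoolSnapshot
{
    // Worker threads currently in use: the configured maximum minus those still available.
    public static int BusyWorkerThreads()
    {
        ThreadPool.GetMaxThreads(out int maxWorkers, out int _);
        ThreadPool.GetAvailableThreads(out int availableWorkers, out int _);
        return maxWorkers - availableWorkers;
    }
}
```

Logging this value periodically gives a rough picture of pool usage over time; a number that keeps climbing while message processing stalls is one hint that threads are being blocked.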

How to find the root cause of thread pool starvation

To find the root cause of thread pool starvation:

- Dump the memory, and then analyze the call stack. For more information, see Collect and analyze memory dumps.

- Use clrmd to dump the memory when thread pool starvation is detected. Then, log the call stack.

Troubleshooting guide

- Open the app server-side log to see if anything abnormal took place.

- Check the app server-side event log to see if the app server restarted.

- Create an issue. Provide the time frame, and email the resource name to us.

Tips

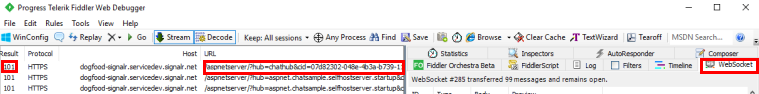

How to view the outgoing request from the client?

Take ASP.NET Core one for example (ASP.NET one is similar):

- From browser: Take Chrome as an example. You can use F12 to open the console window and switch to the Network tab. You might need to refresh the page using F5 to capture the network from the very beginning.

- From C# client:

  You can view local web traffic using Fiddler. WebSocket traffic is supported since Fiddler 4.5.

How to restart client connection?

Here are the sample codes containing restarting connection logic with ALWAYS RETRY strategy:

- ASP.NET Core C# Client

- ASP.NET Core JavaScript Client

- ASP.NET C# Client

- ASP.NET JavaScript Client

Next steps

In this guide, you learned how to handle the common issues. You could also learn more generic troubleshooting methods.

Source: https://docs.microsoft.com/en-us/azure/azure-signalr/signalr-howto-troubleshoot-guide
